Key Considerations for Building an AI Chatbot for Manufacturing Analytics

Discover the essential steps to build an AI chatbot for manufacturing analytics. Learn how to scope the database tables the bot can see, integrate business context, and deploy effectively. Transform your data insights with these key considerations.

In the manufacturing world, data analytics is crucial for gaining a competitive edge. For a company producing galvanized steel parts, real-time insights on production metrics, operational efficiency, and quality control can make all the difference. While this manufacturer had a robust database filled with data captured through sensors and industrial automation, their existing dashboards were limited. They could view basic summaries, but drilling down to ask specific, dynamic questions was nearly impossible.

Enter the Large Language Model (LLM) Bot: a conversational analytics assistant designed to bridge this gap by letting users query data interactively and flexibly, in plain language. By adding a language-to-SQL microservice layer to their ERP system, the company can now answer questions its dashboards never could. Here's how they did it, and how others can replicate this approach in their own data-driven transformation.

Understanding the Database Structure

The first critical step in implementing an LLM Bot for any manufacturing analytics project is understanding the structure of the database and identifying which tables are essential for the relevant queries.

Challenges: Manufacturing databases often contain a myriad of tables, many of which hold irrelevant or redundant data. Exposing all of them to the LLM Bot adds noise to its context and makes it more likely to query the wrong table, reducing the quality of insights.

Solution: Working with the database team, the company identified the core tables needed to track production, quality control, and operational metrics, and exposed only those to the LLM Bot. Narrowing the scope this way significantly improved query accuracy.
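
One way to enforce this kind of scoping is an explicit allowlist that filters the schema before it reaches the model and rejects generated SQL touching anything else. The sketch below is an illustration under stated assumptions, not the company's implementation: `ALLOWED_TABLES`, `filter_schema`, and `uses_only_allowed_tables` are hypothetical names, `prod_run` is an invented table, and the regular-expression check is deliberately naive (a production guard would use a proper SQL parser).

```python
import re

# Hypothetical allowlist of core tables agreed with the database team.
# "tbl_gvp" and "qtl" echo the naming examples in this article; "prod_run" is invented.
ALLOWED_TABLES = {"tbl_gvp", "qtl", "prod_run"}

def filter_schema(full_schema: dict[str, list[str]]) -> dict[str, list[str]]:
    """Keep only allowlisted tables so the rest never reach the LLM's context."""
    return {table: cols for table, cols in full_schema.items() if table in ALLOWED_TABLES}

# Naive pattern for identifiers after FROM/JOIN; a production guard should use a SQL parser.
_TABLE_REF = re.compile(r"\b(?:FROM|JOIN)\s+([A-Za-z_][A-Za-z0-9_]*)", re.IGNORECASE)

def uses_only_allowed_tables(sql: str) -> bool:
    """Return False if generated SQL references any table outside the allowlist."""
    referenced = {name.lower() for name in _TABLE_REF.findall(sql)}
    return referenced <= ALLOWED_TABLES

full_schema = {
    "tbl_gvp": ["pid", "qty"],
    "qtl": ["pid", "res"],
    "hr_payroll": ["emp_id", "salary"],  # irrelevant (and sensitive) for production analytics
}
print(sorted(filter_schema(full_schema)))  # ['qtl', 'tbl_gvp']
```

Filtering on the way in reduces noise; checking on the way out catches the occasional query that still wanders off the approved tables.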

Describing Table and Column Names

Table and column names in industrial databases are often abbreviated and non-descriptive (e.g., “tbl_gvp” instead of “galvanized_part_inventory” or “qtl” for “quality_test_log”).

Challenges: Without clear naming conventions, an LLM cannot reliably tell what a table or column holds, so it struggles to map a user's question to the right data.

Solution: The team built an ontology describing each table and column in simple, descriptive language. This reference was embedded in the LLM's configuration so the model understands each data element's purpose and the context of a query, which lets users ask natural-language questions without knowing the underlying technical names.
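
A minimal sketch of such a description layer, assuming it is kept as a plain dictionary and rendered as text into the model's context, might look like the following. Only `tbl_gvp` and `qtl` come from the examples above; every column name and description (`pid`, `qty`, `ctg_um`, `res`, `ts`) is hypothetical, as are `DATA_DICTIONARY` and `render_dictionary`.

```python
# Hypothetical data dictionary: plain-language descriptions for cryptic names.
# Table names echo the article's examples; all column entries are illustrative.
DATA_DICTIONARY = {
    "tbl_gvp": {
        "description": "Galvanized part inventory, one row per part batch in stock.",
        "columns": {
            "pid": "Part identifier",
            "qty": "Number of units on hand",
            "ctg_um": "Zinc coating thickness in micrometres",
        },
    },
    "qtl": {
        "description": "Quality test log, one row per quality inspection.",
        "columns": {
            "pid": "Part identifier (matches tbl_gvp.pid)",
            "res": "Test result, 'PASS' or 'FAIL'",
            "ts": "Inspection timestamp (UTC)",
        },
    },
}

def render_dictionary(dictionary: dict) -> str:
    """Render the dictionary as plain text to place in the LLM's context."""
    lines = []
    for table, meta in dictionary.items():
        lines.append(f"Table {table}: {meta['description']}")
        for column, meaning in meta["columns"].items():
            lines.append(f"  - {table}.{column}: {meaning}")
    return "\n".join(lines)

print(render_dictionary(DATA_DICTIONARY))
```

Keeping the dictionary as data rather than hand-written prompt text makes it easy to review with the database team and to update when tables change.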

Providing Business Context for Data Relevance

Beyond technical descriptions, the LLM Bot was trained with the company’s specific business logic and goals.

Challenges: Off-the-shelf LLMs lack the company-specific context needed to interpret data correctly for its operational needs, such as how manufacturing defect rates or material efficiency are defined.

Solution: The manufacturing context, including the company's terminology, KPIs, and priorities, was integrated into the LLM Bot so it could interpret user questions accurately and respond with relevant answers. For instance, a question like “What was the defect rate last quarter?” is answered as a rate (defective units relative to the total units produced that quarter) that speaks to production quality, rather than a bare count of defective parts.
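
To make the difference concrete, the sketch below runs both interpretations against a tiny in-memory SQLite table. Everything here is an assumption for illustration: the `prod_run` table, its columns, the sample figures, the quarter boundaries, and the defect-rate formula in `KPI_GLOSSARY`, which each plant would replace with its own agreed definition.

```python
import sqlite3

# Hypothetical KPI glossary text, placed in the prompt alongside the data dictionary.
# The formula is an assumed definition, not the manufacturer's.
KPI_GLOSSARY = {
    "defect rate": "Defective units divided by total units produced in the period, as a percentage.",
}

# Illustrative table and data, in memory only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE prod_run (run_date TEXT, units_produced INTEGER, units_defective INTEGER)")
conn.executemany(
    "INSERT INTO prod_run VALUES (?, ?, ?)",
    [("2024-04-02", 1000, 12), ("2024-05-14", 1500, 18), ("2024-06-20", 500, 10)],
)

# Without business context, a model might return a raw count for the quarter.
raw_count = conn.execute(
    "SELECT SUM(units_defective) FROM prod_run "
    "WHERE run_date BETWEEN '2024-04-01' AND '2024-06-30'"
).fetchone()[0]

# With the glossary, it is steered toward a rate: defective / produced * 100.
defect_rate = conn.execute(
    "SELECT 100.0 * SUM(units_defective) / SUM(units_produced) FROM prod_run "
    "WHERE run_date BETWEEN '2024-04-01' AND '2024-06-30'"
).fetchone()[0]

print(raw_count)              # 40
print(round(defect_rate, 2))  # 1.33
```

Forty defective parts sounds alarming or trivial depending on volume; 1.33% of 3,000 units is the figure a quality team can actually compare across quarters.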

Splitting Tasks Between Multiple LLMs

Designing the LLM Bot with specialized sub-LLMs for distinct tasks streamlined the query process.

Challenges: Asking a single LLM to both translate questions into SQL and explain the results in plain language overloads one model and can create bottlenecks.

Solution: A two-part LLM approach was used: one LLM interprets user questions and converts them into SQL queries, while the other interprets SQL output and responds conversationally. This specialization ensured efficient query conversion and enhanced accuracy in responses.
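
The two-stage flow can be sketched with each model treated as a plain function from prompt to text, which keeps the example independent of any particular LLM provider. The function names, prompt wording, and the `qtl` table are hypothetical, and the lambdas in the usage section are stubs standing in for real model calls. The `SELECT`-prefix check is only a basic guard (it would also reject `WITH` queries); a read-only database account is the stronger safeguard.

```python
import sqlite3
from typing import Callable

# Any function that takes a prompt and returns text; wiring to a real model API is omitted.
LLM = Callable[[str], str]

def answer_question(question: str, schema_context: str, conn: sqlite3.Connection,
                    sql_llm: LLM, answer_llm: LLM) -> str:
    # Stage 1: the SQL model sees the schema context and the question, returns SQL only.
    sql = sql_llm(f"{schema_context}\n\nWrite one SQLite SELECT query answering: {question}")
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("Stage 1 must return a read-only SELECT query")
    rows = conn.execute(sql).fetchall()
    # Stage 2: the answer model sees the question and result rows, not the schema.
    return answer_llm(f"Question: {question}\nSQL result rows: {rows}\nAnswer in one sentence.")

# Usage with stub "models" so the flow runs offline.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE qtl (pid TEXT, res TEXT)")
conn.executemany("INSERT INTO qtl VALUES (?, ?)", [("A1", "PASS"), ("A2", "FAIL"), ("A3", "FAIL")])

reply = answer_question(
    "How many quality tests failed?",
    "Table qtl: quality test log (pid, res)",
    conn,
    sql_llm=lambda prompt: "SELECT COUNT(*) FROM qtl WHERE res = 'FAIL'",
    answer_llm=lambda prompt: prompt.splitlines()[1],  # stub: echoes the rows line
)
print(reply)  # SQL result rows: [(2,)]
```

Because each stage gets a narrower prompt, the two models can be tuned, swapped, or tested independently.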

Implementing Memory for Improved User Experience

Memory was incorporated to store previous queries, allowing users to follow up on prior questions seamlessly.

Challenges: Without memory, users would have to restate the full context of every follow-up question.

Solution: The LLM Bot maintains a session-based memory, allowing it to recall recent questions and responses. This feature helps users ask follow-up questions, making complex data exploration more intuitive, efficient, and user-friendly.
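
A minimal sketch of session-scoped memory, assuming only the last few question/answer turns are kept and prepended to the next prompt, could look like this. `SessionMemory`, `memory_for`, the in-process `sessions` store, and the five-turn default are all hypothetical choices; a deployed microservice would likely keep this state in its own session store.

```python
from collections import deque

class SessionMemory:
    """Keeps the last few question/answer turns for one user session only."""

    def __init__(self, max_turns: int = 5):
        # A deque with maxlen drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))

    def as_context(self) -> str:
        """Format prior turns so follow-ups like 'and for line 2?' can be resolved."""
        return "\n".join(f"User: {q}\nBot: {a}" for q, a in self.turns)

    def clear(self) -> None:
        # Call when the session ends so nothing persists across sessions.
        self.turns.clear()

# Illustrative in-process store: one memory object per session id.
sessions: dict[str, SessionMemory] = {}

def memory_for(session_id: str) -> SessionMemory:
    return sessions.setdefault(session_id, SessionMemory())
```

Bounding the history keeps prompts short, and clearing it at session end supports the caution about sensitive data in the tips below.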

Injecting Schema and Sample Rows for Enhanced Accuracy

Table schemas and sample data rows were injected to give the LLM concrete reference points.

Challenges: Without example data, the LLM could misinterpret table structures or column data types.

Solution: Injecting table schemas and a few sample rows into the LLM’s configuration improved its ability to generate accurate SQL queries based on actual data structures, which in turn reduced errors and misinterpretations during analysis.
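
One common way to build this context is to pair each table's `CREATE TABLE` statement with a handful of rows. The sketch below does that for SQLite, which keeps it self-contained; an ERP running on MariaDB or PostgreSQL would read the equivalent metadata from `information_schema.columns` instead. `schema_with_samples` and the `qtl` data are hypothetical, and table names are interpolated directly only because they are assumed to come from a trusted allowlist.

```python
import sqlite3

def schema_with_samples(conn: sqlite3.Connection, tables: list[str], n_rows: int = 3) -> str:
    """Build prompt context: each table's CREATE statement plus a few sample rows."""
    parts = []
    for table in tables:
        row = conn.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
        ).fetchone()
        if row is None:
            continue  # skip names that are not tables in this database
        parts.append(row[0] + ";")
        # Identifiers cannot be bound as parameters; assumes names come from a trusted allowlist.
        cursor = conn.execute(f'SELECT * FROM "{table}" LIMIT ?', (n_rows,))
        parts.append("-- columns: " + ", ".join(d[0] for d in cursor.description))
        parts.extend(f"-- {r}" for r in cursor.fetchall())
    return "\n".join(parts)

# Usage with an illustrative in-memory table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE qtl (pid TEXT, res TEXT)")
conn.executemany(
    "INSERT INTO qtl VALUES (?, ?)",
    [("A1", "PASS"), ("A2", "FAIL"), ("A3", "PASS"), ("A4", "PASS")],
)
print(schema_with_samples(conn, ["qtl"], n_rows=2))
```

A few rows are usually enough to show value formats (for example, that results are stored as `'PASS'`/`'FAIL'` rather than 1/0) without bloating the prompt.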

Deploying the Microservice and Ensuring Continuous Testing

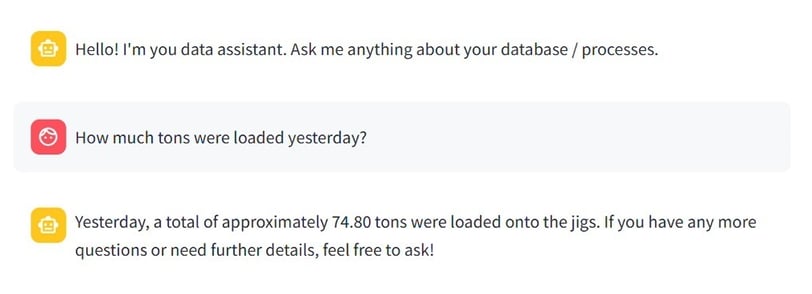

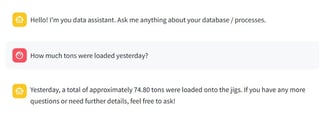

Deploying the LLM Bot as a microservice within the company's ERPNext chat interface let users access analytics insights conversationally.

Challenges: Manufacturing environments are dynamic, and data structures can change frequently.

Solution: Continuous testing and model fine-tuning were implemented to account for changing data structures or new tables in the ERP. Regular audits ensure the LLM Bot adapts to changes, maintaining its accuracy and relevance.
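
Part of such an audit can be automated by snapshotting the columns of every table the bot relies on and flagging any drift, so the allowlist, descriptions, and injected schema can be updated before answers degrade. The sketch below uses SQLite's `PRAGMA table_info` for self-containment; `snapshot_columns` and `detect_drift` are hypothetical helpers, and a MariaDB or PostgreSQL backend would query `information_schema.columns` instead.

```python
import sqlite3

def snapshot_columns(conn: sqlite3.Connection, tables: list[str]) -> dict[str, list[str]]:
    """Record each table's column names (field 1 of each PRAGMA table_info row)."""
    return {t: [row[1] for row in conn.execute(f'PRAGMA table_info("{t}")')] for t in tables}

def detect_drift(expected: dict[str, list[str]], conn: sqlite3.Connection) -> list[str]:
    """Compare a stored snapshot with the live schema and describe every difference."""
    current = snapshot_columns(conn, list(expected))
    problems = []
    for table, cols in expected.items():
        live = current[table]
        if not live:  # PRAGMA table_info returns no rows for a missing table
            problems.append(f"{table}: table missing")
            continue
        for col in sorted(set(cols) - set(live)):
            problems.append(f"{table}: column removed: {col}")
        for col in sorted(set(live) - set(cols)):
            problems.append(f"{table}: column added: {col}")
    return problems
```

Run on a schedule, an empty list means the bot's context still matches the database; anything else is a prompt to review the configuration and rerun any question-level regression tests.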

Key Tips for Implementing an LLM Analytics Bot

Define Scope Carefully: Avoid connecting all database tables at once. Choose relevant tables and columns that provide actionable insights.

Use Descriptive Naming Conventions: If you’re working with nondescript names, create a glossary and inject it into the LLM context to avoid confusion.

Implement Memory Cautiously: Limit memory to the current session and clear it when the session ends, especially in environments with high data sensitivity.

Leverage Schema Injection: Always provide the schema and sample data for more accurate SQL query generation.

Test Regularly: Continuous testing is crucial because manufacturing data structures change over time as operational needs evolve.

The addition of an LLM Bot transformed how this manufacturing company interacts with its data. Moving beyond static dashboards, they now have a dynamic, AI-driven tool that lets them ask specific questions and gain insights quickly and intuitively. By using a microservice with language-to-SQL translation within their ERP chat interface, they have harnessed the power of conversational AI for real-time, actionable insights.